Overview

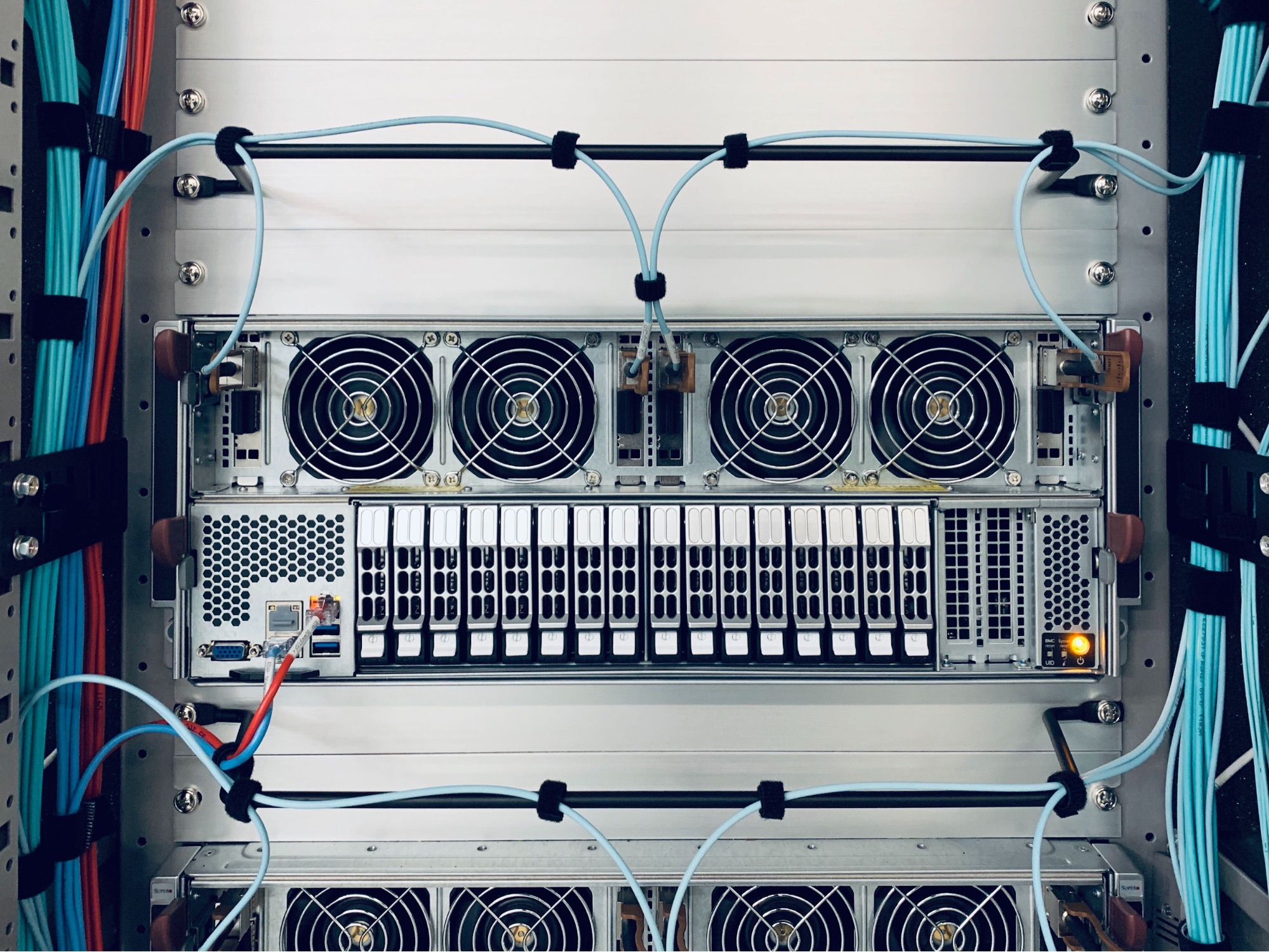

Computing infrastructure powering problem-solving capabilities of deep learning

The core technologies of Preferred Networks (PFN), especially deep learning, require enormous computing power. To perform vast numbers of computations efficiently, we operate our own computer clusters (more commonly known as supercomputers). Our clusters are named MN followed by a series number: MN-1, MN-2 and MN-3.

Infrastructure

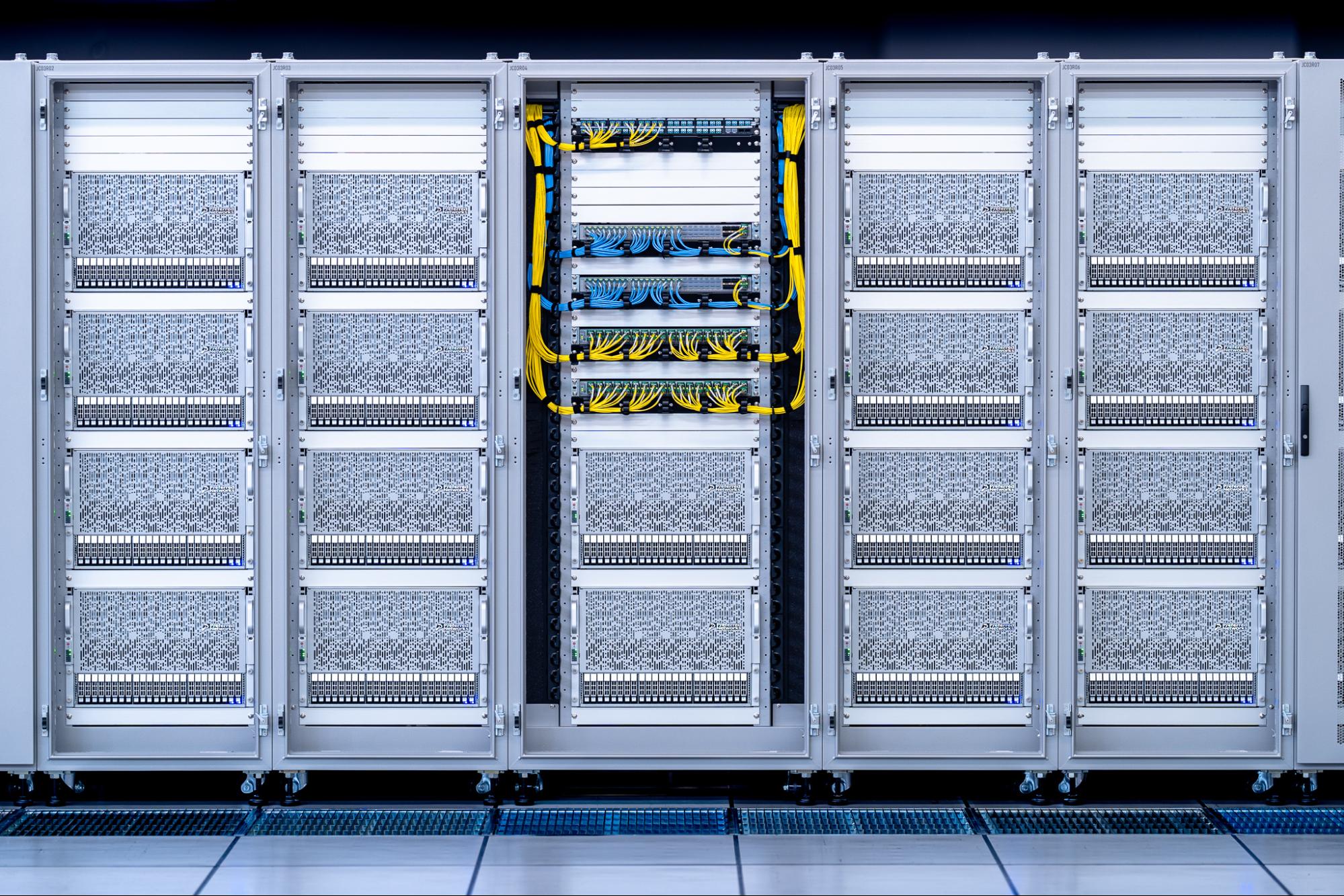

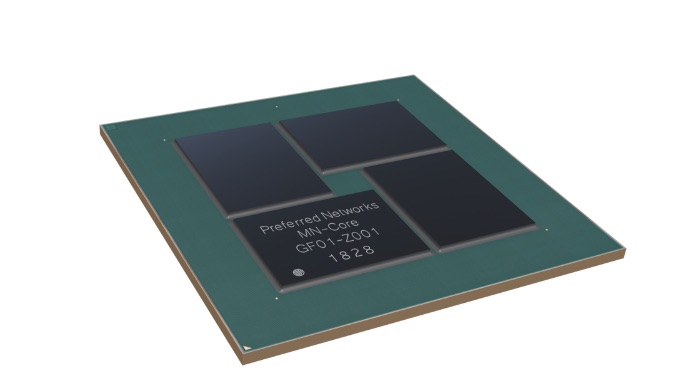

MN-3

Operating since May 2020, MN-3 is PFN’s third-generation computer cluster. It uses MN-Core, a highly efficient custom processor co-developed by PFN and Kobe University specifically for deep learning. PFN is currently working to increase MN-3's computational speed on practical deep learning workloads. MN-3 has topped the Green500 list of the world's most energy-efficient supercomputers three times: in June 2020, June 2021 and November 2021.

Systems used for Green500 measurement and their respective performance

| | November 2021 | June 2021 | November 2020 | June 2020 |
|---|---|---|---|---|
| Nodes | 32 | 32 | 40 | 40 |
| MN-Core processors | 128 | 128 | 160 | 160 |
| CPU (Intel Xeon) cores | 1,536 | 1,536 | 1,920 | 1,920 |
| Peak performance (theoretical, under given standard) | 3.390 PFlops | 3.138 PFlops | 3.92 PFlops | 3.92 PFlops |
| HPL benchmark | 2.181 PFlops | 1.822 PFlops | 1.653 PFlops | 1.621 PFlops |
| Energy efficiency | 39.38 GFlops/W | 29.70 GFlops/W | 26.04 GFlops/W | 21.11 GFlops/W |
| Green500 ranking | #1 | #1 | #2 | #1 |
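Green500 ranks systems by energy efficiency, i.e. HPL performance divided by the power drawn during the run. As a rough illustration, the sketch below inverts that ratio to estimate the average power implied by each measurement, and also expresses HPL performance as a fraction of theoretical peak. The figures are copied from the table and are rounded, so the results are approximate. It assumes the 3.92 PFlops peak applies to both 2020 measurements, since the two 2020 columns appear to share one peak entry; the function names are illustrative.

```python
# Rough arithmetic on the Green500 figures in the table above.
# Assumption: the 3.92 PFlops peak is shared by both 2020 measurements.
RESULTS = {
    # label: (HPL Rmax in PFlops, efficiency in GFlops/W, theoretical peak in PFlops)
    "November 2021": (2.181, 39.38, 3.390),
    "June 2021": (1.822, 29.70, 3.138),
    "November 2020": (1.653, 26.04, 3.92),
    "June 2020": (1.621, 21.11, 3.92),
}


def implied_power_kw(hpl_pflops: float, gflops_per_watt: float) -> float:
    """Power implied by efficiency = performance / power, returned in kW."""
    watts = hpl_pflops * 1e6 / gflops_per_watt  # 1 PFlops = 1e6 GFlops
    return watts / 1e3


def hpl_fraction_of_peak(hpl_pflops: float, peak_pflops: float) -> float:
    """Share of theoretical peak achieved on HPL (Rmax / Rpeak)."""
    return hpl_pflops / peak_pflops


for label, (rmax, eff, peak) in RESULTS.items():
    print(f"{label}: ~{implied_power_kw(rmax, eff):.1f} kW, "
          f"HPL/peak = {hpl_fraction_of_peak(rmax, peak):.1%}")
```

Running it prints one line per measurement, for example an implied power of roughly 55 kW for November 2021 and roughly 77 kW for June 2020.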

Green500 certificate for November 2021

PFN plans to expand MN-Core-powered computer clusters in multiple phases. The first of these, MN-3a, was completed with the following configuration in May 2020.

MN-3a is made up of 1.5 “zones,” each of which consists of 32 compute nodes (MN-Core Servers) tightly coupled by two MN-Core DirectConnect Switches.

Configuration of the MN-3a cluster:

- 48 compute nodes (MN-Core Servers)

- Network between MN-Core servers:

  - MN-Core DirectConnect (interconnect developed specifically for MN-Core processors)

  - 5 100GbE Ethernet

Configuration of each MN-3a node:

MN-Core Server

| Component | Specification |
|---|---|
| MN-Core | MN-Core Board x 4 |
| CPU | Intel Xeon 8260M two-way (48 physical cores) |
| Memory | 384GB DDR4 |
| Storage Class Memory | 3TB Intel Optane DC Persistent Memory |
| Network | MN-Core DirectConnect (112Gbps) x 2; Mellanox ConnectX-6 (100GbE) x 2; onboard (10GbE) x 2 |
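To see what the per-node figures add up to, the following sketch multiplies them out over MN-3a's 1.5 zones of 32 nodes. It is plain arithmetic on the numbers listed for each MN-Core Server; the class, function and field names are illustrative, not part of any PFN tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node figures from the MN-Core Server table."""
    mn_core_boards: int = 4
    cpu_cores: int = 48       # Intel Xeon 8260M, two-way
    ddr4_gb: int = 384
    optane_tb: int = 3        # Intel Optane DC Persistent Memory


def cluster_totals(zones: float, nodes_per_zone: int, node: NodeSpec) -> dict:
    """Multiply per-node resources by the number of nodes in the cluster."""
    nodes = zones * nodes_per_zone
    if nodes != int(nodes):
        raise ValueError("zones * nodes_per_zone must be a whole number of nodes")
    nodes = int(nodes)
    return {
        "nodes": nodes,
        "mn_core_boards": nodes * node.mn_core_boards,
        "cpu_cores": nodes * node.cpu_cores,
        "ddr4_gb": nodes * node.ddr4_gb,
        "optane_tb": nodes * node.optane_tb,
    }


# MN-3a: 1.5 zones of 32 MN-Core Servers each.
totals = cluster_totals(zones=1.5, nodes_per_zone=32, node=NodeSpec())
print(totals)
```

That works out to 48 nodes, 192 MN-Core Boards, 2,304 CPU cores, 18,432 GB of DDR4 and 144 TB of Optane memory. With a single 32-node zone, the same function gives 1,536 CPU cores, matching the CPU core count listed for the 32-node Green500 system.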

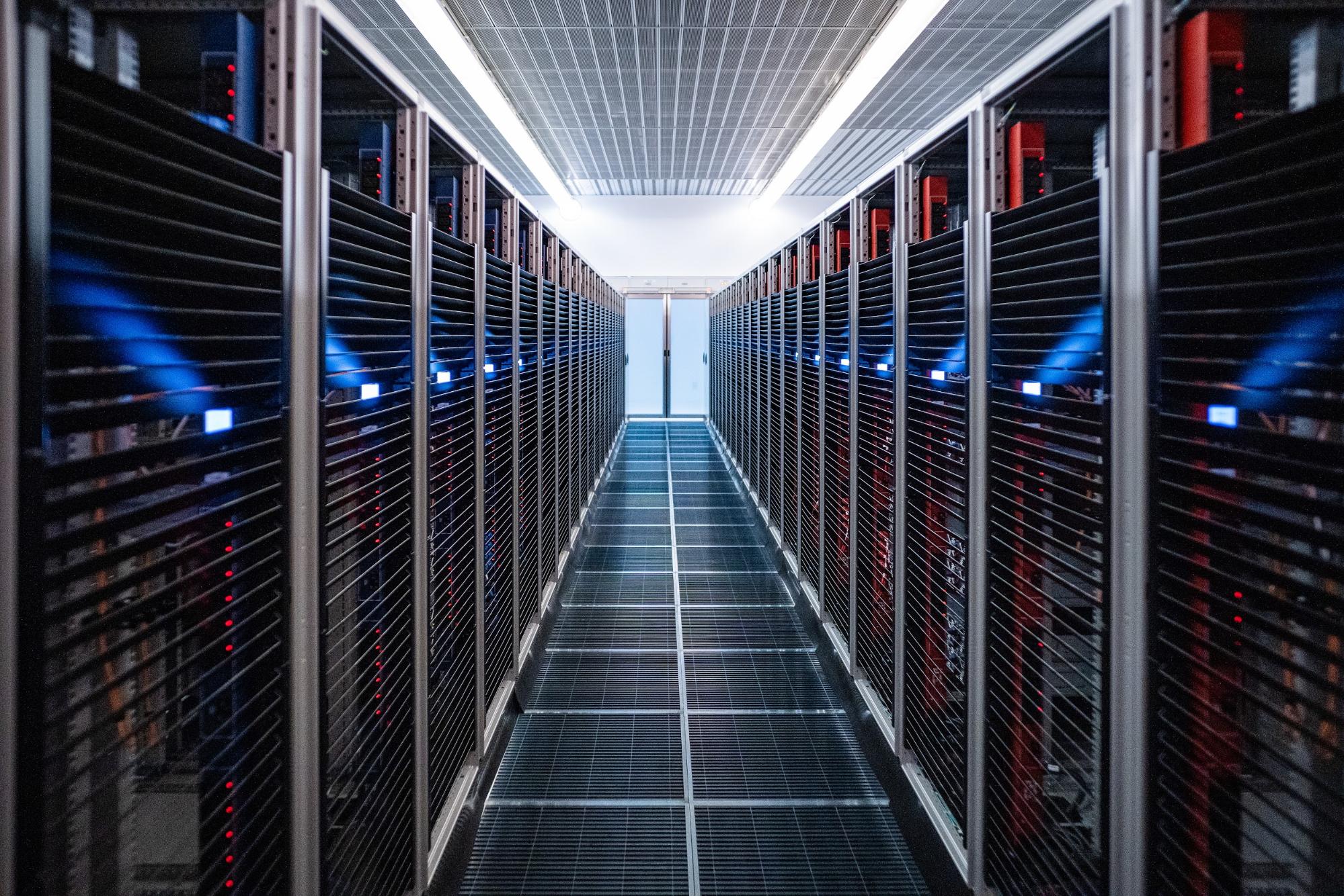

MN-2

MN-2, operating since July 2019, is the first GPU cluster built and managed solely by PFN.

Specifications of the MN-2 cluster:

- 128 GPU servers (compute nodes)

- 32 CPU servers (compute nodes)

- 24 storage servers

- 18 100GbE Ethernet switches

Specifications of each compute node on MN-2:

GPU server

| Component | Specification |
|---|---|
| GPU | NVIDIA V100 SXM x 8 |
| CPU | Intel Xeon 6254 two-way (36 physical cores) |
| Memory | 384GB DDR4 |
| Network | Mellanox ConnectX-4 (100GbE) x 4; onboard (10GbE) x 2 |

CPU server

| Component | Specification |
|---|---|
| CPU | Intel Xeon 6254 two-way (36 physical cores) |
| Memory | 384GB DDR4 |
| Network | Mellanox ConnectX-4 (100GbE) x 2; onboard (10GbE) x 2 |
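A similar tally for MN-2 totals GPUs, CPU cores, memory and 100GbE adapters across the compute nodes. Storage servers are left out because their per-server specifications are not listed; the structure and names below are illustrative.

```python
from typing import NamedTuple


class ServerType(NamedTuple):
    servers: int        # number of servers of this type
    gpus: int           # per server
    cpu_cores: int      # per server (Intel Xeon 6254, two-way)
    memory_gb: int      # DDR4 per server
    nics_100gbe: int    # Mellanox ConnectX-4 (100GbE) per server


# Per-server figures from the MN-2 tables; storage servers are excluded.
MN2 = {
    "gpu_server": ServerType(servers=128, gpus=8, cpu_cores=36, memory_gb=384, nics_100gbe=4),
    "cpu_server": ServerType(servers=32, gpus=0, cpu_cores=36, memory_gb=384, nics_100gbe=2),
}


def total(field: str) -> int:
    """Sum a per-server field over all compute servers."""
    return sum(s.servers * getattr(s, field) for s in MN2.values())


for field in ("gpus", "cpu_cores", "memory_gb", "nics_100gbe"):
    print(f"{field}: {total(field):,}")
```

Across its 160 compute nodes this gives 1,024 V100 GPUs, 5,760 CPU cores, 61,440 GB of DDR4 and 576 ConnectX-4 adapters.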

MN-1, MN-1b

MN-1 is a GPU computer cluster that NTT Communications operates exclusively for PFN. The MN-1 cluster has two generations: MN-1 (operating since September 2017) and MN-1b (operated between July 2018 and July 2021).

The configuration of each MN-1 generation is as follows:

- MN-1: 128 GPU servers (NVIDIA P100 x 8, FDR 56Gbps InfiniBand x 2)

- MN-1b: 64 GPU servers (NVIDIA V100 x 8, EDR 100Gbps InfiniBand x 2)
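The listed figures for MN-1 and MN-1b also give total GPU counts and the InfiniBand bandwidth available per server and per GPU. This is a back-of-the-envelope sketch using nominal link rates (56 Gbps FDR, 100 Gbps EDR), not measured throughput; the `Generation` class is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Generation:
    name: str
    servers: int
    gpus_per_server: int
    ib_links: int            # InfiniBand links per server
    ib_gbps_per_link: int    # nominal link rate

    @property
    def total_gpus(self) -> int:
        return self.servers * self.gpus_per_server

    @property
    def ib_gbps_per_server(self) -> int:
        return self.ib_links * self.ib_gbps_per_link

    @property
    def ib_gbps_per_gpu(self) -> float:
        # Server bandwidth shared evenly across its GPUs.
        return self.ib_gbps_per_server / self.gpus_per_server


MN1 = Generation("MN-1", servers=128, gpus_per_server=8, ib_links=2, ib_gbps_per_link=56)
MN1B = Generation("MN-1b", servers=64, gpus_per_server=8, ib_links=2, ib_gbps_per_link=100)

for g in (MN1, MN1B):
    print(f"{g.name}: {g.total_gpus} GPUs, {g.ib_gbps_per_server} Gbps per server, "
          f"{g.ib_gbps_per_gpu:.0f} Gbps per GPU")
```

MN-1 comes to 1,024 P100 GPUs with 112 Gbps per server (14 Gbps per GPU), while MN-1b has 512 V100 GPUs with 200 Gbps per server (25 Gbps per GPU).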

MN-1 milestones:

- Preferred Networks achieved the world’s fastest training time in deep learning

- Extremely Large Minibatch SGD: Training ResNet-50 on ImageNet in 15 Minutes

- Preferred Networks’ private supercomputer ranked first in the Japanese industrial supercomputers TOP 500 list

MN-1b milestones:

- Preferred Networks wins second place in the Google AI Open Images – Object Detection Track, in a field of 454 teams

- PFDet: 2nd Place Solution to Open Images Challenge 2018 Object Detection Track

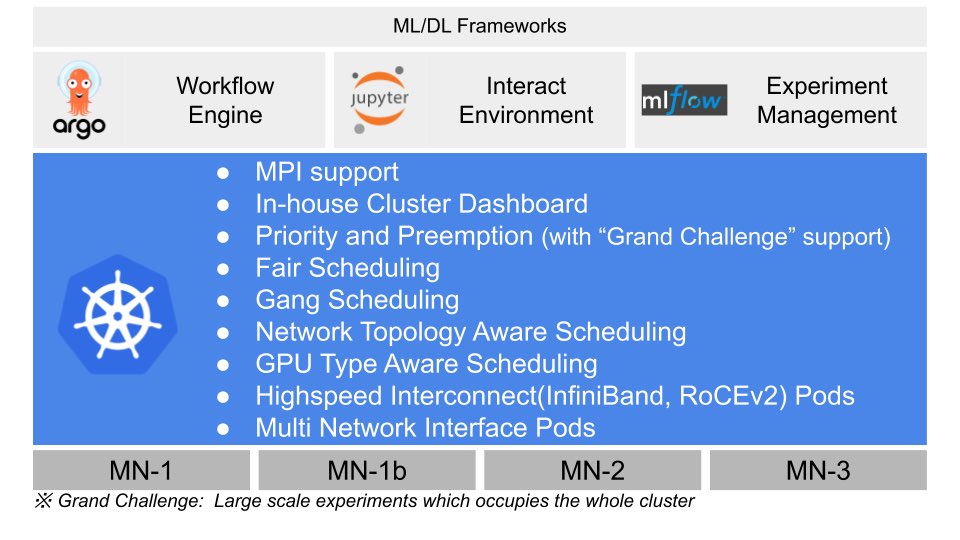

Middleware

PFN’s computer clusters use Kubernetes, an open-source system for managing containerised applications, as their core technology. Combined with PFN’s own schedulers and front-end tools, it provides a computing platform for efficient machine learning and deep learning research.
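PFN's own schedulers and front-end tools are not described here, so the following is only a generic illustration of how a GPU job is expressed to Kubernetes. It builds a Pod manifest as a Python dict and prints it as JSON. The Pod requests eight GPUs, matching one MN-2 GPU server, and can optionally name a custom scheduler through `spec.schedulerName`. It assumes the NVIDIA device plugin, which exposes GPUs as the `nvidia.com/gpu` resource. The pod name, container image and scheduler name `pfn-scheduler` are hypothetical.

```python
from __future__ import annotations

import json


def gpu_training_pod(name: str, image: str, command: list[str], gpus: int,
                     scheduler_name: str | None = None) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests `gpus` GPUs."""
    spec: dict = {
        "restartPolicy": "Never",  # run once, like a batch training job
        "containers": [
            {
                "name": "trainer",
                "image": image,
                "command": command,
                # Extended resources such as GPUs are requested via limits.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }
        ],
    }
    if scheduler_name is not None:
        # Hand the pod to a custom scheduler instead of the default one.
        spec["schedulerName"] = scheduler_name
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": spec,
    }


# Hypothetical values for illustration only.
pod = gpu_training_pod(
    name="resnet50-train",
    image="registry.example.com/trainer:latest",
    command=["python", "train.py"],
    gpus=8,
    scheduler_name="pfn-scheduler",
)
print(json.dumps(pod, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`. Leaving `scheduler_name` unset lets the cluster's default scheduler place the pod.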