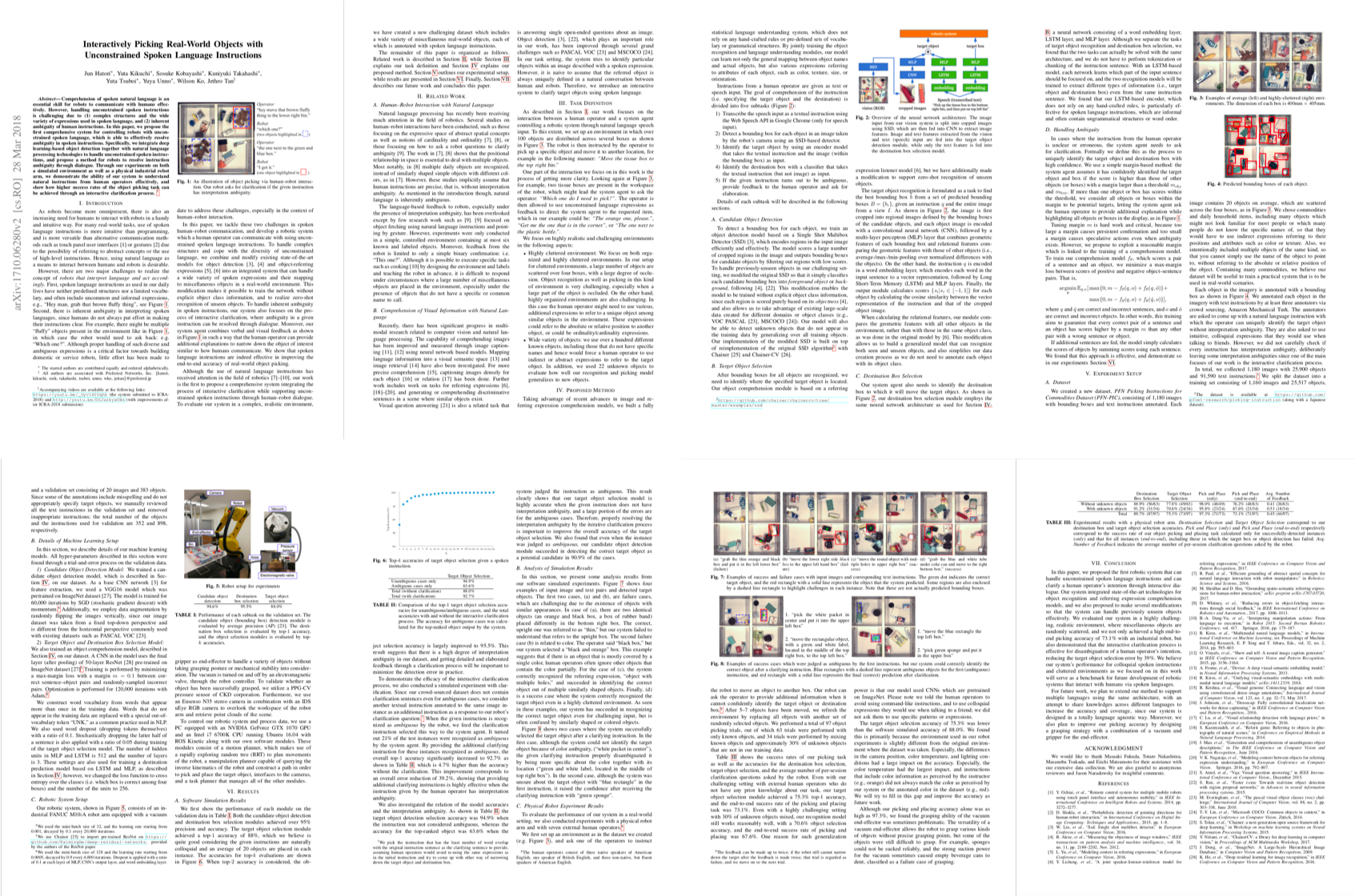

Overview

At Preferred Networks, we are applying the latest speech and natural language processing technologies as a means of communication between humans and robots.

Our latest work has produced an interactive system that can be operated with unconstrained spoken language instructions to perform a common object-picking task.

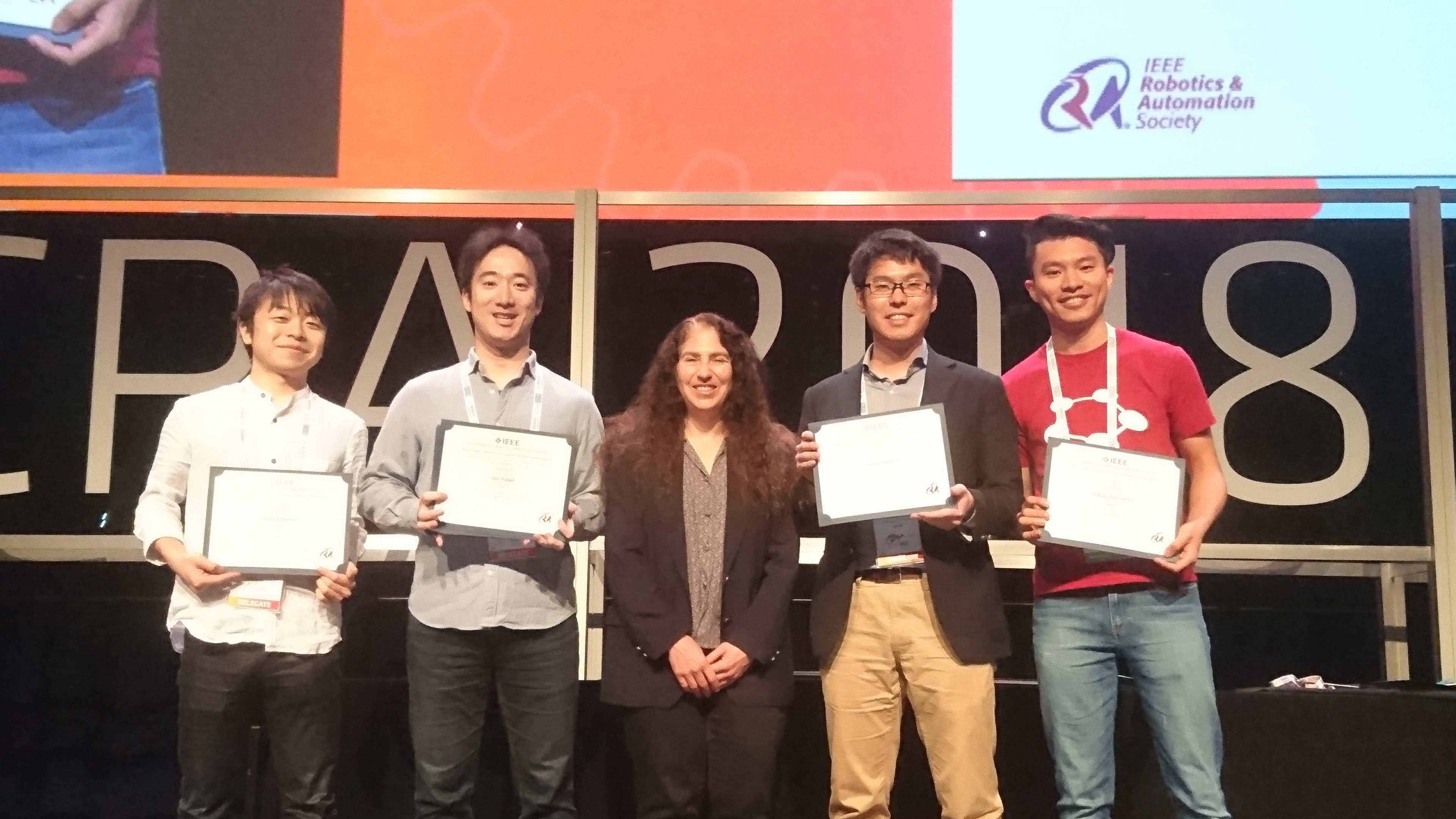

This work received the ICRA 2018 Best Paper Award on Human-Robot Interaction (HRI).

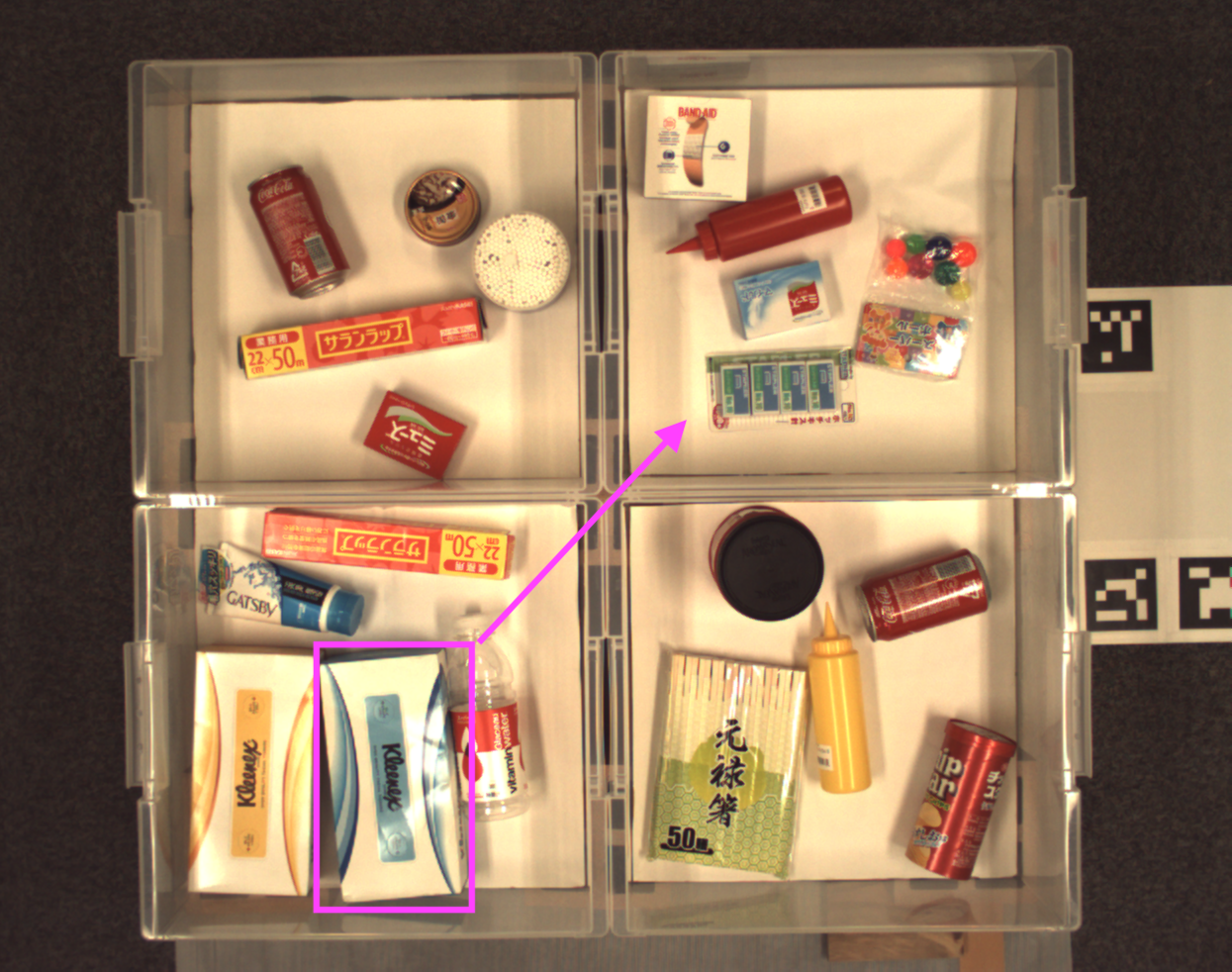

Dataset

PFN Picking Instructions for Commodities Dataset (PFN-PIC)

This dataset is a collection of spoken language instructions for a robotic system to pick and place common objects. Text instructions and corresponding object images are provided.

We consider a situation in which an operator instructs the robot to pick up a specific object and move it to another location.

For example: "Move the blue and white tissue box to the top right bin."

This dataset consists of RGBD images, bounding box annotations, destination box annotations, and text instructions.
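To make the composition of a sample concrete, here is a minimal sketch of how one record could be represented in Python. It is not the dataset's actual file format: the class name `PickingSample`, its field names, the `(x_min, y_min, x_max, y_max)` pixel box layout, and the file names in the usage lines are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical box layout (assumption): (x_min, y_min, x_max, y_max) in pixels.
BBox = Tuple[int, int, int, int]


@dataclass
class PickingSample:
    """One hypothetical PFN-PIC record; field names are illustrative only."""
    rgb_path: str                # path to the color image of the scene
    depth_path: str              # path to the aligned depth image
    object_boxes: List[BBox]     # bounding boxes of the objects in the scene
    target_index: int            # index of the object the instruction refers to
    destination_box: BBox        # region where the object should be placed
    instructions: List[str] = field(default_factory=list)  # text instructions

    def target_box(self) -> BBox:
        """Return the bounding box of the object to be picked."""
        return self.object_boxes[self.target_index]


def box_center(box: BBox) -> Tuple[float, float]:
    """Center of a box, usable as a coarse pick or place point."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


# Hypothetical usage with made-up values.
sample = PickingSample(
    rgb_path="scene_0001_rgb.png",
    depth_path="scene_0001_depth.png",
    object_boxes=[(10, 20, 60, 90), (100, 40, 180, 120)],
    target_index=1,
    destination_box=(300, 0, 400, 100),
    instructions=["Move the blue and white tissue box to the top right bin."],
)
print(box_center(sample.target_box()))    # (140.0, 80.0)
print(box_center(sample.destination_box))  # (350.0, 50.0)
```

Keeping the target as an index into the list of object boxes, rather than as a separate box, mirrors the picking task: the system must choose one object among several candidates in the scene, and several instructions may refer to the same scene.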

Citation

(The first six authors contributed equally and are listed alphabetically.)

Jun Hatori, Yuta Kikuchi, Sosuke Kobayashi, Kuniyuki Takahashi, Yuta Tsuboi, Yuya Unno, Wilson Ko, Jethro Tan. Interactively Picking Real-World Objects with Unconstrained Spoken Language Instructions. Proceedings of the International Conference on Robotics and Automation (ICRA 2018), 2018.

BibTeX

```bibtex
@inproceedings{interact_picking18,
  title={Interactively Picking Real-World Objects with Unconstrained Spoken Language Instructions},
  author={Hatori, Jun and Kikuchi, Yuta and Kobayashi, Sosuke and Takahashi, Kuniyuki and Tsuboi, Yuta and Unno, Yuya and Ko, Wilson and Tan, Jethro},
  booktitle={Proceedings of International Conference on Robotics and Automation},
  year={2018}
}
```